Machine Learning Fundamentals By Milly Kc

$39.00

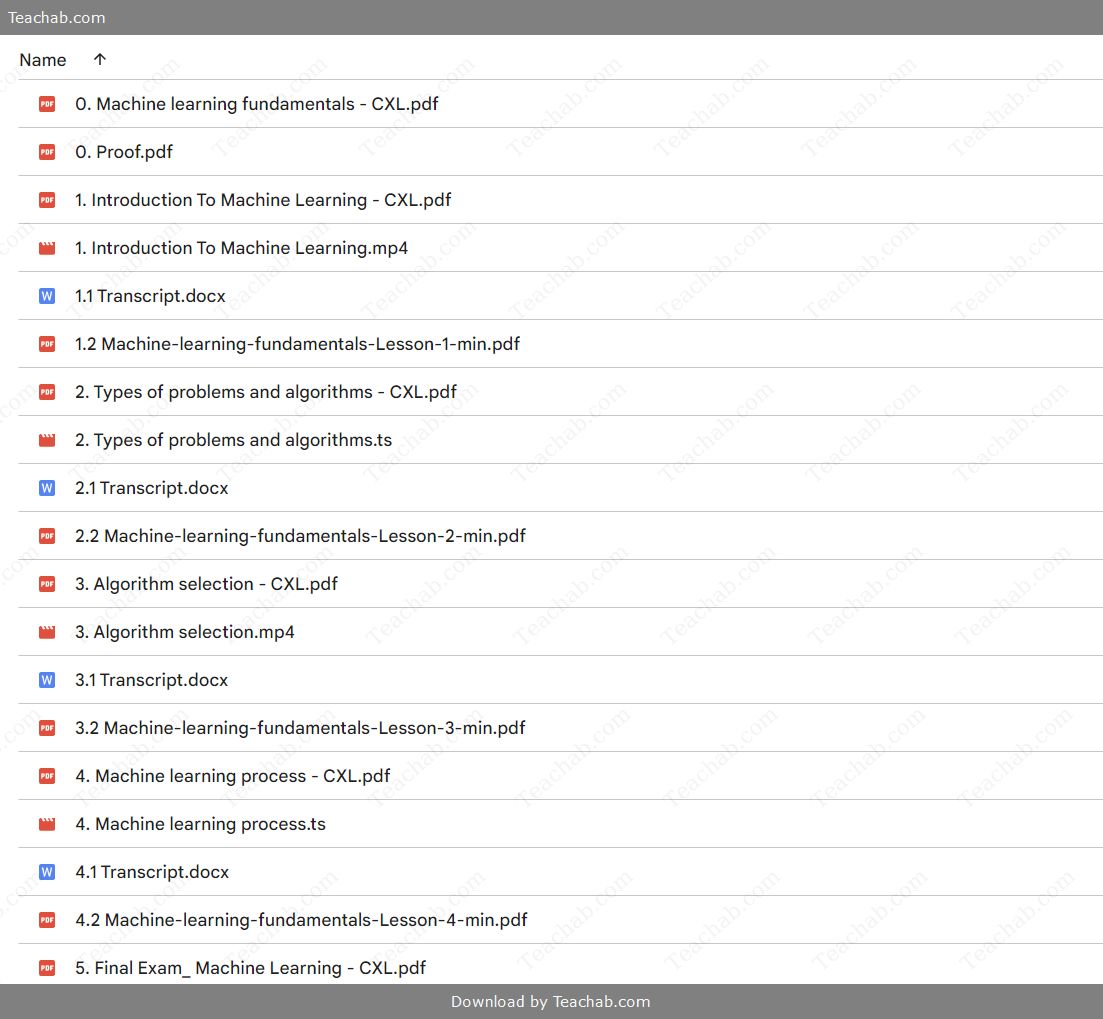

Machine Learning Fundamentals By Milly Kc – Digital Download!

Content Proof:

Machine Learning Fundamentals by Milly KC

Machine learning (ML) has become an integral part of today’s technological landscape, influencing many sectors and redefining how problems are solved across industries. As algorithms evolve and data proliferates, understanding the fundamentals of ML allows professionals to use these technologies to improve decision-making, enhance automation, and foster innovation. Milly KC’s work on machine learning fundamentals serves as a resource for anyone looking to grasp the essential concepts and applications of this rapidly advancing field.

From understanding the principles of supervised and unsupervised learning to exploring intricate algorithms, the insights within this framework equip learners with the tools needed for practical application and future exploration. As we dive deeper into machine learning fundamentals, we will uncover vital concepts, techniques, and real-world applications that demonstrate the power and versatility of machine learning solutions, illuminating the path for seasoned practitioners and newcomers alike.

Key Concepts in Machine Learning

To grasp the essence of machine learning, one must become familiar with several key concepts that form the bedrock of the field. At its core lies the distinction between supervised, unsupervised, and reinforcement learning.

Supervised learning can be likened to a student learning from a teacher: data labeled with the correct answers enable algorithms to learn and improve over time. Unsupervised learning, by contrast, involves finding patterns or groupings in unlabeled data, akin to a student discovering concepts without guidance. Reinforcement learning works by trial and error: an agent is rewarded for desirable actions and penalized for undesirable ones, similar to how humans learn through experience.

Additionally, the importance of data cannot be overstated: data quality and quantity strongly influence model performance. High-quality datasets provide cleaner inputs for training, improving predictive capability. As machine learning systems evolve, they increasingly rely on complex algorithms that process vast amounts of data, and understanding algorithm types such as decision trees, support vector machines, and neural networks makes it possible to select models suited to specific problems.

Lastly, it is vital to grasp concepts like feature selection and engineering, as these processes focus on identifying the most relevant aspects of data that contribute to successful learning outcomes. Continuous innovation and exploration of these concepts equip learners to navigate the dynamic landscape of machine learning effectively.

Supervised Learning Techniques

Supervised learning techniques are among the most widely used approaches in machine learning, largely because of their straightforward framework. The algorithm is fed a labeled dataset and learns from the given input-output pairs, relating features to outcomes much as a student learns complex concepts under a teacher’s guidance.

A central element of supervised learning is model training, an iterative process in which the algorithm repeatedly adjusts its internal parameters to better fit the training data. Prediction quality hinges on how well these parameters are fitted, much as a student refines skills through practice; with each iteration the model can improve its accuracy on the patterns it has learned.

Errors and misclassifications during training are quantified by a loss function, which measures the difference between predicted and actual values; minimizing this loss is the primary objective of training. Minimizing training loss alone, however, does not guarantee generalization, the ability to perform well on unseen data. Regularization helps prevent overfitting by penalizing overly complex models, while cross-validation detects overfitting by estimating performance on held-out data; together they help ensure the model captures broader trends rather than mere noise.
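
To make the training loop concrete, here is a minimal sketch in Python (an illustrative choice; the source names no language or library) that fits a one-feature linear model by batch gradient descent on the mean squared error loss. The toy data, learning rate, and epoch count are assumptions chosen for the example, not values from the source.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the line w*x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def fit_linear(xs, ys, learning_rate=0.05, epochs=2000):
    """Repeatedly nudge w and b against the gradient of the MSE loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Partial derivatives of the MSE with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step downhill: a small move that lowers the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Hypothetical labeled data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = fit_linear(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, loss={mse(w, b, xs, ys):.6f}")
```

Each epoch moves the parameters a small step against the gradient, so the loss shrinks over the iterations; a learning rate that is too large would make the updates overshoot and diverge instead.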

Supervised learning encompasses various algorithms used in regression and classification tasks. For instance, regression techniques like linear regression predict continuous outcomes, while classification algorithms such as logistic regression and decision trees can categorize data into distinct classes. These algorithms collectively empower data scientists to tackle complex issues across areas like finance, healthcare, and marketing.

Unsupervised Learning Methods

Unsupervised learning methods explore unlabeled datasets to uncover hidden patterns or relationships without guidance. Imagine a researcher exploring new territory without a map: this is the essence of unsupervised learning, where algorithms sift through raw data to derive meaningful insights.

At the forefront of unsupervised learning is clustering, a technique that groups similar data points based on inherent characteristics. Popular algorithms, such as k-means and hierarchical clustering, allow users to categorize data into distinct clusters automatically. This approach has vast implications, ranging from market segmentation in business to image analysis in healthcare.
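
Clustering can be sketched with k-means in its classic form, Lloyd’s algorithm, which alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points. The points and random seed below are hypothetical; library implementations such as scikit-learn’s `KMeans` add refinements like k-means++ initialization.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Cluster points with Lloyd's algorithm; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points

    def nearest(p):
        # Index of the centroid closest to p in Euclidean distance.
        return min(range(k), key=lambda i: math.dist(p, centroids[i]))

    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p)].append(p)
        # Update step: move each centroid to the mean of its members.
        new_centroids = [
            tuple(sum(coord) / len(members) for coord in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    return centroids, [nearest(p) for p in points]

# Two hypothetical, well-separated groups of 2-D points.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, labels = kmeans(points, k=2)
print(labels)
```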

Another key aspect of unsupervised learning is dimensionality reduction, which simplifies datasets while preserving critical information. Methods such as Principal Component Analysis (PCA) project the data onto a small number of new axes (principal components) that capture most of its variance, discarding low-variance directions that often correspond to noise. This makes complex data easier to visualize and can improve model performance, although each component is a combination of the original features and may be harder to interpret on its own.
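
For two features, the first principal component can be computed in closed form, which makes the mechanics of PCA visible without a linear-algebra library. The sketch below is restricted to 2-D data for that reason (a simplifying assumption); general PCA takes the eigenvectors, or an SVD, of the full covariance or data matrix. The data points are hypothetical.

```python
import math

def first_principal_component(points):
    """Return the mean and unit direction of greatest variance for 2-D points."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    # Entries of the 2x2 sample covariance matrix [[a, b], [b, c]].
    a = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    c = sum((y - my) ** 2 for _, y in points) / (n - 1)
    b = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    # Largest eigenvalue of a symmetric 2x2 matrix, in closed form.
    lam = (a + c) / 2 + math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    # A matching eigenvector; fall back to an axis when the features are uncorrelated.
    if b != 0:
        vx, vy = b, lam - a
    else:
        vx, vy = (1.0, 0.0) if a >= c else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    return (mx, my), (vx / norm, vy / norm)

def project(points, mean, direction):
    """Reduce each 2-D point to its coordinate along the principal direction."""
    return [(x - mean[0]) * direction[0] + (y - mean[1]) * direction[1] for x, y in points]

data = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9), (5.0, 5.1)]
mean, direction = first_principal_component(data)
reduced = project(data, mean, direction)  # five 2-D points become five numbers
print(direction, reduced)
```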

Anomaly detection is an additional vital application of unsupervised learning techniques, enabling the identification of unexpected outliers or anomalies within datasets. Businesses leverage this capability to detect fraudulent transactions or monitor system health, ensuring data integrity.
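
One of the simplest anomaly detectors flags values that lie far from the mean, measured in standard deviations (a z-score rule). It is a statistical baseline rather than a learned model, and the transaction amounts and threshold below are hypothetical.

```python
import statistics

def zscore_outliers(values, threshold=3.0):
    """Flag values lying more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [v for v in values if abs(v - mean) / stdev > threshold]

# Hypothetical transaction amounts with one unusually large payment.
amounts = [20.0, 22.5, 19.0, 21.0, 23.5, 20.5, 18.0, 22.0, 21.5, 500.0]
print(zscore_outliers(amounts, threshold=2.5))
```

With only ten values, the single extreme payment inflates the standard deviation itself, which is why a lower threshold is used here; median-based variants are often preferred because they are less affected by the outliers they are trying to find.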

Association rule learning also plays a crucial role by uncovering interesting relationships between variables in large databases. It enables organizations to derive insights, such as identifying products often bought together, enhancing marketing strategies through effective product recommendations.
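
The two quantities behind such rules are support (how often items appear together) and confidence (how often the consequent appears given the antecedent). The sketch below scores item pairs only, which is the core idea that algorithms such as Apriori extend to larger itemsets; the baskets and thresholds are hypothetical.

```python
from itertools import combinations

def pair_rules(transactions, min_support=0.3, min_confidence=0.6):
    """Return (antecedent, consequent, support, confidence) for frequent item pairs."""
    baskets = [set(t) for t in transactions]
    n = len(baskets)

    def count(itemset):
        # Number of baskets containing every item in itemset.
        return sum(1 for basket in baskets if itemset <= basket)

    rules = []
    for a, b in combinations(sorted(set().union(*baskets)), 2):
        both = count({a, b})
        support = both / n  # share of all baskets containing both items
        if support < min_support:
            continue
        # Confidence of X -> Y: share of baskets with X that also contain Y.
        for x, y in ((a, b), (b, a)):
            confidence = both / count({x})
            if confidence >= min_confidence:
                rules.append((x, y, support, confidence))
    return rules

transactions = [
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["bread", "jam"],
    ["milk", "butter"],
    ["bread", "butter", "jam"],
]
rules = pair_rules(transactions)
for rule in rules:
    print(rule)
```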

Reinforcement Learning Principles

Reinforcement learning (RL) takes a distinctive approach to machine learning: agents interact with an environment and learn optimal actions through trial and error, much as humans learn from experience. A useful comparison is training a puppy, where desired behaviors are rewarded and undesired ones are corrected, gradually guiding the puppy toward the behavior its trainer wants.

At the core of RL are concepts like the agent, which performs actions within the environment, and the policy, signifying a strategy that determines the agent’s actions based on the current situation. The outcomes of these actions are assessed through a reward signal, incentivizing agents to adopt actions that maximize cumulative rewards over time.

As when learning a new game, RL requires careful handling of the exploration vs. exploitation dilemma: agents must balance exploring new strategies that might yield greater rewards against exploiting known strategies that already yield satisfactory outcomes. Striking this balance is especially important in dynamic, real-time applications such as robotics and gaming.

A vital component of RL is the value function, which estimates the expected cumulative (typically discounted) reward an agent can obtain starting from a given state, or from taking a given action in that state, while following its policy. These estimates guide agents toward optimal behavior across diverse scenarios.
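
The agent, reward signal, exploration strategy, and value estimates come together in tabular Q-learning, one standard RL algorithm (chosen here for illustration; the source does not name a specific method). The five-state corridor environment and all hyperparameters below are hypothetical.

```python
import random

N_STATES = 5        # states 0..4 in a corridor; state 4 is the goal
MOVES = (-1, +1)    # action 0 moves left, action 1 moves right

def step(state, action):
    """Hypothetical environment: reward 1.0 on reaching the goal, otherwise 0."""
    next_state = min(max(state + MOVES[action], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=1000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values Q[s][a]: estimated discounted return of action a in state s."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore at random with probability epsilon (or on ties),
            # otherwise exploit the action with the higher estimated value.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Move Q[s][a] toward the reward plus the discounted value of the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q = q_learning()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(N_STATES - 1)]
print(policy)
```

The discount factor `gamma` makes nearer rewards worth more, so the learned values shrink with distance from the goal and the greedy policy points right in every non-goal state.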

Through these principles, reinforcement learning has paved the way for significant advancements in various fields, including robotics, game playing, and autonomous systems. By learning from real-time interactions, agents continuously adapt to their environments, pushing the boundaries of intelligent automation.

Feature Engineering Importance

Feature engineering stands as a critical phase in shaping the performance of machine learning models. It involves the thoughtful selection, modification, or creation of features from raw data, enabling algorithms to leverage the most relevant information for predictive analysis. The process can be likened to an artist meticulously curating their palette before embarking on a masterpiece.

The importance of feature engineering cannot be overstated: models trained on robust features tend to achieve better predictive performance. Effective feature engineering draws on domain knowledge, since integrating expert insight into the modeling process greatly improves how the data are interpreted. For instance, transforming continuous variables into categorical bins can reveal important segments that influence model outputs.
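
Binning a continuous variable can be sketched with the standard-library `bisect` module. The age boundaries and labels below are hypothetical; in practice they would come from domain knowledge or from the data.

```python
import bisect

def bin_feature(values, edges, labels):
    """Map each continuous value to a categorical label using sorted bin edges."""
    if len(labels) != len(edges) + 1:
        raise ValueError("need exactly one more label than edges")
    # bisect_right returns the bin index; a value equal to an edge goes to the upper bin.
    return [labels[bisect.bisect_right(edges, v)] for v in values]

ages = [15, 22, 37, 45, 68, 80]
age_groups = bin_feature(
    ages,
    edges=[18, 40, 65],
    labels=["minor", "young_adult", "middle_aged", "senior"],
)
print(age_groups)
```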

Furthermore, effective feature engineering reduces the risk of overfitting. By concentrating on the most impactful features, models maintain simplicity and generalizability, critical for real-world applications where unseen data often deviates from training sets.

Another cornerstone of feature engineering is its iterative nature. As models undergo training and evaluation, ongoing assessments allow engineers to refine and adjust features continuously. This cycle of reflection and refinement ensures models stay aligned with evolving data dynamics.

Automation is transforming feature engineering, with tools and libraries designed to streamline feature extraction and transformation. This trend promises to make machine learning more accessible and efficient, widening participation in the field.

Thus, feature engineering plays an indispensable role in enhancing model interpretability, accuracy, and robustness across diverse applications, including finance, healthcare, and marketing.

Algorithms and Models

At the heart of machine learning lies a wide range of algorithms, each suited to specific tasks and conditions. Choosing the appropriate algorithm for a problem is akin to selecting the right tool for a construction job: using the wrong tool can complicate the task or even derail the project altogether.

Regression algorithms predict continuous outcomes. Linear regression, for instance, fits a straight line (or, with several features, a hyperplane) that minimizes the squared error between predictions and observed values, while regularized variants such as LASSO and Ridge regression add a penalty on coefficient size that helps prevent overfitting on more complex datasets.
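
The effect of the Ridge penalty is easiest to see with a single centred feature and no intercept (a simplifying assumption), where minimizing sum((y - w*x)^2) + λ*w^2 has the closed-form solution w = Σxy / (Σx² + λ). The data below are hypothetical.

```python
def ridge_slope(xs, ys, lam):
    """Closed-form ridge slope for one centred feature with no intercept.

    Minimises sum((y - w*x)**2) + lam * w**2; lam = 0 gives ordinary least squares.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [-4.1, -1.9, 0.0, 2.1, 3.9]
for lam in (0.0, 1.0, 10.0, 100.0):
    # Larger penalties shrink the coefficient toward zero.
    print(lam, round(ridge_slope(xs, ys, lam), 3))
```

LASSO replaces the squared penalty with an absolute-value one, which can drive coefficients exactly to zero and so doubles as a form of feature selection.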

Classification algorithms, in contrast, assign data to discrete classes. Logistic regression estimates class probabilities for binary outcomes, whereas Support Vector Machines (SVMs) excel in high-dimensional spaces by finding the maximum-margin hyperplane that separates classes. Decision trees also belong to this category, offering interpretable models built from hierarchical decision rules, and ensemble methods such as Random Forests combine many trees to improve performance and reduce variance.
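
A decision tree is built by repeatedly choosing the split that makes the resulting groups purest. The sketch below finds a single such split (a "decision stump") using Gini impurity, one common purity measure; a full tree would apply the same search recursively to each side. The feature values and labels are hypothetical.

```python
def gini(labels):
    """Gini impurity: probability that two labels drawn at random (with replacement) differ."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(xs, ys):
    """Find the threshold on one feature that minimises weighted Gini impurity."""
    best = (None, gini(ys))  # (threshold, impurity); None means "don't split"
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2  # candidate threshold halfway between neighbouring values
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if score < best[1]:
            best = (t, score)
    return best

# Hypothetical data: transaction size vs. whether it was flagged for review.
sizes = [10, 20, 30, 200, 250, 300]
flagged = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(sizes, flagged))  # (115.0, 0.0): a perfectly pure split
```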

Exploring the realm of clustering algorithms, we observe techniques employed primarily in unsupervised learning contexts. K-means and hierarchical clustering are pivotal tools for identifying groupings in unlabeled data, enabling practitioners to derive meaningful insights from datasets without pre-existing labels.

Deep learning introduces neural networks with multi-layer architectures that can learn rich representations from vast amounts of data. These networks have gained prominence for their effectiveness in tasks such as image recognition and natural language processing.

Thus, the landscape of machine learning algorithms is rich and diverse, with each algorithm serving particular purposes and performing differently depending on the dataset, objective, and context.

Common Algorithms Used in ML

A thorough grasp of common machine learning algorithms allows practitioners to navigate the diverse landscape of model selection effectively. Algorithms can be broadly classified into categories depending on whether they tackle regression, classification, clustering, or reinforcement tasks.

Regression Algorithms:

- Linear Regression: Models the relationship between a dependent variable and one or more independent variables as a straight line (or hyperplane); straightforward and efficient when that relationship is roughly linear.

- Regularized Regression: Techniques like LASSO and Ridge regression add penalties to reduce the risk of overfitting, particularly suitable for high-dimensional datasets.

- Regression Trees: Decision trees can model relationships by segmenting data based on feature values, providing interpretable outputs.

Classification Algorithms:

- Logistic Regression: Applies the logistic (sigmoid) function to a weighted sum of features to estimate the probability of a binary outcome, which is then thresholded to assign a class.

- Support Vector Machines (SVM): Finds the maximum-margin boundary separating classes, even in high-dimensional spaces, and handles non-linear relationships through kernel functions.

- Decision Trees: Models decisions through simple rules derived from feature analysis, widely utilized for various applications.

Clustering Algorithms:

- K-Means Clustering: Partitions data into K distinct clusters based on distance metrics, renowned for its simplicity and effectiveness.

- DBSCAN: Detects clusters of varying shapes and sizes while handling noise effectively, making it useful for complex datasets.

- Hierarchical Clustering: Constructs a tree-like structure of clusters, facilitating a comprehensive understanding of data distributions.

Deep Learning Algorithms:

- Deep Neural Networks: Comprising multiple layers of neurons, these models can capture intricate patterns, especially useful in areas such as image and text analysis.

In summary, there exists a diverse array of machine learning algorithms, each engineered for tackling specific problems while demonstrating strengths and drawbacks inherent to their design principles and application contexts.

Comparison of ML Models

When choosing a machine learning model, a systematic comparison helps ensure good performance in the target application. Several factors influence the decision:

- Performance Metrics: Evaluating models with metrics such as accuracy, precision, recall, and F1-score lets practitioners gauge their reliability. Each metric suits different situations: accuracy is the proportion of correct predictions, while precision and recall are more informative than accuracy when classes are imbalanced.

- Overfitting vs. Underfitting: An effective model balances complexity and simplicity. Excessively complex models tend to overfit, memorizing noise in the training data, while overly simple models underfit. Ensemble methods such as Random Forests often reduce the overfitting seen in single decision trees.

- Scalability: The capacity of a model to handle large datasets is critical for many real-world applications. Kernel SVMs scale poorly because their training cost grows rapidly with the number of samples, whereas ensemble methods like Random Forests tend to handle larger datasets more efficiently.

- Data Type Suitability: Algorithms vary in effectiveness depending on data characteristics. High-dimensional datasets may suit algorithms like SVMs and decision trees, which can exploit many features effectively, while distance-based methods such as K-Means clustering usually need preprocessing, such as scaling features to comparable ranges, to deliver useful results.

To summarize, the comparison of machine learning models necessitates an evaluation of performance metrics, scalability, and data compatibility, allowing practitioners to ascertain the most suitable approach for their unique problems.

Evaluation Metrics for ML Models

Evaluating machine learning models is a fundamental step that dictates the success of any predictive system. Defining the right evaluation metrics is crucial as they provide the necessary insights to determine model effectiveness and inform continuous improvements. Here, we delve into pivotal evaluation metrics catering to different types of machine learning tasks.

- Accuracy: The proportion of correct predictions (true positives plus true negatives) among all predictions. It is a common primary metric but can be misleading on imbalanced datasets.

- Precision: The ratio of true positives to all predicted positives. It is critical where false positives are costly, for instance in spam filtering, where legitimate email should not be marked as spam.

- Recall (Sensitivity): The ratio of true positives to all actual positives, measuring a model’s ability to find every relevant example. Recall matters when false negatives carry serious consequences, as in medical diagnosis.

- F1-Score: The harmonic mean of precision and recall, which balances the two and gives a more holistic view of performance on datasets with uneven class distributions.

- ROC Curve and AUC: The Receiver Operating Characteristic (ROC) curve conveys model performance in distinguishing classes across varying thresholds, with the Area Under the Curve (AUC) quantifying its discriminatory power.

- Kappa Statistic: A measure of agreement between predicted and actual classifications adjusted for chance, offering deeper insights into categorical predictions.

- Mean Squared Error (MSE): A core metric for regression tasks that captures the average squared difference between predictions and actual outcomes; because errors are squared, large errors are penalized heavily.

- Confusion Matrix: A table that breaks classification performance down into true positives, false positives, true negatives, and false negatives, the counts from which metrics such as precision and recall are derived.
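
The definitions above translate directly into code. This plain-Python sketch computes the confusion-matrix counts, the derived classification metrics, and MSE for hypothetical labels and predictions; in practice, scikit-learn’s `sklearn.metrics` module provides tested implementations such as `confusion_matrix`, `precision_score`, `recall_score`, `f1_score`, and `mean_squared_error`.

```python
def confusion_counts(actual, predicted, positive=1):
    """Count true/false positives/negatives for a binary classifier."""
    pairs = list(zip(actual, predicted))
    tp = sum(a == positive and p == positive for a, p in pairs)
    fp = sum(a != positive and p == positive for a, p in pairs)
    fn = sum(a == positive and p != positive for a, p in pairs)
    tn = sum(a != positive and p != positive for a, p in pairs)
    return tp, fp, fn, tn

def classification_report(actual, predicted, positive=1):
    """Accuracy, precision, recall, and F1 derived from the confusion counts."""
    tp, fp, fn, tn = confusion_counts(actual, predicted, positive)
    accuracy = (tp + tn) / len(actual)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def mean_squared_error(actual, predicted):
    """Average squared difference between targets and predictions."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

# Hypothetical labels (1 = positive class) and a model's predictions.
actual    = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
predicted = [1, 0, 0, 1, 0, 1, 1, 0, 0, 0]
print(confusion_counts(actual, predicted))
print(classification_report(actual, predicted))
print(mean_squared_error([3.0, 5.0, 2.0], [2.5, 5.0, 3.0]))
```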

By employing these metrics thoughtfully, practitioners can recognize the strengths and weaknesses of their models and make informed decisions about future adjustments.

Model Selection Strategies

Selecting the right machine learning model requires more than a basic understanding of algorithms: it calls for a combination of knowledge, evaluation, and strategic planning. The following strategies can guide practitioners toward the best option for their projects.

- Cross-Validation: The dataset is partitioned into k subsets (folds); the model is trained on k − 1 folds and validated on the remaining one, rotating until every fold has served as the validation set. Averaging the results gives a more reliable performance estimate than a single train/test split.

- Grid Search: A systematic approach to hyperparameter tuning that evaluates model performance for every combination of values in a predefined grid and keeps the best-performing configuration.

- Random Search: A more efficient alternative to grid search that samples hyperparameter values at random from specified ranges instead of exhaustively evaluating every combination, often finding good configurations with far fewer evaluations.

- Feature Selection: Determining the relevance of features for model training with methods such as Recursive Feature Elimination (RFE) not only improves model performance but reduces complexity and potential overfitting.

- Ensemble Methods: Combining multiple models often results in performance improvement beyond any individual model, leveraging diversity through techniques like bagging, boosting, or stacking to enhance prediction strength.

- Metric-Driven Selection: Aligning model evaluation with business objectives enables organizations to select models based on an understanding of the costs associated with different types of errors, ensuring decisions encapsulate real-world implications.
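
Cross-validation and grid search combine naturally: each candidate hyperparameter is scored by its average validation error across folds. The sketch below tunes the penalty of a one-feature, no-intercept Ridge model (a hypothetical choice, as are the data and grid); scikit-learn offers production versions of these ideas as `KFold`, `GridSearchCV`, and `RandomizedSearchCV`.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and deal them into k folds of near-equal size."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def ridge_slope(xs, ys, lam):
    """Closed-form ridge slope for one feature with no intercept."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def cv_error(xs, ys, lam, k=5):
    """Mean validation MSE over k folds, training on the other k - 1 folds each time."""
    fold_errors = []
    for fold in k_fold_indices(len(xs), k):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        w = ridge_slope([xs[i] for i in train], [ys[i] for i in train], lam)
        fold_errors.append(sum((ys[i] - w * xs[i]) ** 2 for i in fold) / len(fold))
    return sum(fold_errors) / k

def grid_search(xs, ys, grid, k=5):
    """Score every candidate penalty by cross-validation and return the best one."""
    scores = {lam: cv_error(xs, ys, lam, k) for lam in grid}
    return min(scores, key=scores.get), scores

# Hypothetical, roughly linear data (y close to 2x) and candidate penalties.
xs = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
ys = [-4.2, -2.8, -2.1, -0.9, 0.1, 1.2, 1.9, 3.1, 3.8, 5.2]
best_lam, scores = grid_search(xs, ys, grid=[0.0, 0.1, 1.0, 10.0, 100.0])
print(best_lam, {lam: round(s, 3) for lam, s in scores.items()})
```

Replacing the fixed `grid` with values drawn from a range (for example with `random.uniform`) turns the same loop into random search.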

In summary, model selection in machine learning is a multifaceted process requiring rigorous evaluation, systematic methods, and strategic thinking, which together help practitioners navigate the many available options effectively.

Applications of Machine Learning

The application of machine learning extends far beyond theory: it touches diverse industries, solving real-world challenges and unlocking new opportunities. By understanding these applications, professionals can tailor their expertise to make the most of machine learning’s capabilities.

- Manufacturing: Machine learning algorithms use large datasets to streamline production processes, improve predictive maintenance, and optimize supply chains. For instance, predictive analytics can anticipate equipment failures, minimizing costly downtime.

- Healthcare: ML revolutionizes healthcare through predictive diagnostics and personalized medicine applications. Algorithms analyze patient data and medical images to identify anomalies, improving early detection rates and paving the way for customized treatment plans.

- Finance: Predictive models assess risks in financial transactions, detecting anomalies indicative of fraud. ML aids in refining credit scoring algorithms, enhancing loan approval processes based on comprehensive data assessments.

- Marketing: Machine learning has transformed marketing by facilitating customer segmentation and targeted advertising strategies. Businesses utilize algorithms to analyze consumer behavior and demographics, delivering personalized product recommendations and enhancing brand loyalty.

- Transportation: Self-driving vehicles rely heavily on machine learning to navigate terrains and make real-time decisions. Additionally, ML algorithms optimize logistics and supply chains, improving delivery efficiency through dynamic routing adjustments.

- Cybersecurity: Machine learning strengthens cybersecurity by identifying and responding to threats in real time. Algorithms analyze network patterns and user behaviors to detect breaches before they escalate.

- Telecommunications: This sector benefits from ML in traffic management and predictive maintenance, where analyzing data records allows operators to manage outages proactively, enhancing service reliability.

The exponential growth of machine learning applications signifies its potential for driving innovation and efficiency across numerous sectors, providing businesses with powerful tools to navigate complexities and challenges effectively.

Industry Applications

The versatility of machine learning translates into numerous innovative applications across various industries, highlighting its crucial role in modernizing and optimizing established practices. Below are some notable implementations in key sectors.

- Manufacturing and Industry 4.0: In the wake of Industry 4.0, manufacturers leverage ML to enhance production capabilities through predictive maintenance and asset optimization. By analyzing sensor data, manufacturers can anticipate asset failures, reduce operational downtime, and improve overall efficiency.

- Healthcare: Machine learning contributes significantly to advancements in diagnostics and patient care. Appropriate algorithms detect diseases from medical imaging and wearable sensor data, reducing diagnostic errors and refining treatment plans through precision medicine.

- Finance: In the finance sector, machine learning drives efficient fraud detection and risk management. Banks utilize predictive models to analyze transaction patterns, identify potential threats, and enhance decision-making processes tied to lending and investment strategies.

- Retail and Marketing: Retailers employ machine learning for product recommendations and inventory management. Algorithms analyze past purchasing behaviors and trends, allowing businesses to tailor promotions and stock according to customer preferences.

- Telecommunications: In telecommunications, ML optimizes network performance and customer service. Predictive analytics help providers manage maintenance schedules and allocate resources effectively, targeting interventions before substantial service interruptions occur.

- Agriculture: Machine learning algorithms assess soil conditions, weather patterns, and crop health, enabling farmers to make data-guided decisions to optimize yield. Precision agriculture is on the rise, utilizing these insights to enhance productivity while mitigating waste.

Through these varied applications, machine learning revolutionizes traditional industries, unlocking new potential and creating pathways for innovation that resonate across global markets.

Case Studies in Machine Learning

Machine learning has produced transformative case studies that showcase its versatility and efficacy across different industries. Let’s explore some notable examples that illustrate the power of machine learning applications.

- Amazon: Amazon employs machine learning to optimize its operations and enhance the customer experience. Its recommendation engine analyzes past purchasing behavior to provide tailored product suggestions, significantly increasing customer engagement and sales.

- Tesla’s Autopilot: Tesla uses machine learning extensively in Autopilot, its driver-assistance system, which helps vehicles steer, accelerate, and brake within their lane under active driver supervision. The system learns from vast amounts of real-world driving data to improve its decision-making, safety, and navigation capabilities.

- Netflix: Leveraging machine learning, Netflix employs sophisticated algorithms to analyze user behavior and preferences. This analysis powers their recommendation system, enabling users to discover new content tailored to individual viewing habits, driving user engagement and satisfaction.

- Google Photos: Using machine learning algorithms, Google Photos offers intelligent image recognition capabilities. Users can search for images based on keywords, allowing for effortless organization and retrieval of photos based on identified objects or people.

- Spotify: The music streaming service employs machine learning to curate personalized playlists and make song recommendations based on listeners’ habits. These insights have proven crucial in enhancing user experience and retention.

These case studies underscore the immense value machine learning brings to organizations, driving efficiency, innovation, and personalized experiences while paving the way for future advancements across various fields.

Emerging Trends in ML Applications

The landscape of machine learning continues to evolve at a rapid pace, introducing new trends that redefine its applications. Several emerging trends showcase the transformative potential of machine learning across industries.

- Increased Automation: Automation remains at the forefront of machine learning advancements, enhancing efficiency in various processes, from customer service chatbots to automated data analysis tools.

- Explainable AI (XAI): As the implications of machine learning grow, industries demand transparency in AI models. Explainable AI seeks to create models that can elucidate their decision-making processes, boosting trust and accountability in machine learning systems.

- Federated Learning: This technique trains a shared model across many devices or organizations while the raw data stays where it was collected; only model updates are exchanged. Organizations can thus build robust models without centralizing sensitive individual data, strengthening privacy and enabling collaboration across entities.

- AI-Enhanced Security: The cybersecurity landscape is shifting towards AI-powered solutions that provide real-time threat detection and response capabilities, making machine learning indispensable in combating evolving cyber threats.

- AI Democratization: With the proliferation of user-friendly tools and platforms, AI democratization aims to empower a broader audience to engage with AI technologies, irrespective of technical expertise. This trend fosters innovation and accessibility.
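
The aggregation step in federated learning can be sketched with the widely used Federated Averaging (FedAvg) scheme: the server averages the weight vectors reported by clients, weighting each by its local dataset size. The local training step is omitted, and the client weights and sizes below are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Combine client model weights, weighting each client by its local dataset size.

    Only the weight vectors reach the server; raw training data stays on the clients.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]

# Hypothetical weights reported by three clients after a round of local training.
weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
print(federated_average(weights, sizes))  # [3.5, 4.5]
```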

As these trends materialize, they manifest new opportunities and challenges for organizations, ultimately shaping the future landscape of machine learning applications across diverse disciplines.

Challenges and Limitations

Despite the remarkable advancements and applications of machine learning, several challenges and limitations persist that must be addressed for successful implementations. Acknowledging these obstacles helps researchers and practitioners devise solutions that enhance the effectiveness of machine learning systems.

- Data Quality and Availability: High-quality data is essential for training effective machine learning models. However, challenges persist, including limited access to relevant datasets, data inconsistencies, and issues related to labeling quality and accuracy. These constraints can compromise model performance and hinder predictions.

- Overfitting and Underfitting: Striking a balance between model complexity and generalizability is crucial. Overfitting, where a model learns noise rather than underlying patterns, leads to poor performance on unseen data. Conversely, underfitting occurs when a model is too simplistic, failing to capture important trends. Both issues underscore the importance of appropriate techniques for model validation and evaluation.

- Ethical Considerations: The rapid evolution of machine learning raises ethical questions regarding data privacy, algorithmic bias, and accountability in decision-making processes. Ensuring fairness and transparency in ML practices becomes increasingly critical, as biased algorithms may perpetuate societal inequalities.

- Interpretability and Transparency: Many machine learning models operate as black boxes, making it difficult to understand their decision-making processes. This opacity poses challenges for trust and regulatory compliance, emphasizing the need for models that produce interpretable and explainable outputs.

By addressing these challenges and embracing best practices, practitioners can forge a path toward more effective and ethical machine learning implementations that resonate with real-world goals.

Data Quality and Availability Issues

Data quality and availability issues represent significant challenges in the realm of machine learning, directly influencing model performance and reliability. Several key aspects underscore the importance of this issue:

- High Collection Costs: Acquiring high-quality datasets can be time-consuming and expensive. Organizations often grapple with constraints related to resources, funding, and manpower, limiting their ability to collect and curate extensive datasets for training models.

- Data Labeling Difficulties: Quality labeled data is essential for supervised learning, but the labeling process can be laborious and prone to inaccuracies. Human oversight and subjective interpretations can lead to inconsistent labeling, undermining model training.

- Inconsistent Data Quality: Datasets often contain noise, duplicates, and irrelevant features, creating inconsistencies. Establishing rigorous data quality assurance practices becomes a necessity to ensure models receive clean and relevant input.

- Limited Access: Legal and ethical restrictions often impede data sharing between organizations, especially for sensitive personal information or proprietary datasets. These constraints can stifle collaboration and limit opportunities for comprehensive data insights.

- Data Drift: Changes in the environment from which data is collected can lead to data drift, altering the characteristics of underlying data distributions. Training models on outdated information may hinder their effectiveness in rapidly changing contexts.
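
One common way to monitor data drift is the Population Stability Index (PSI), which compares how a feature’s values are distributed across bins in a baseline sample versus a recent one. The samples and bin edges below are hypothetical, and the cut-offs in the comment are widely used rules of thumb rather than universal thresholds.

```python
import bisect
import math

def population_stability_index(baseline, recent, edges, eps=1e-6):
    """PSI = sum over bins of (recent share - baseline share) * ln(recent share / baseline share)."""
    def bin_shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # Clamp empty bins to a small share so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = bin_shares(baseline), bin_shares(recent)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))

baseline = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
recent = [6, 7, 8, 9, 10, 11, 12, 13, 14, 15]  # same feature, shifted upward
edges = [3.5, 6.5, 9.5]
score = population_stability_index(baseline, recent, edges)
# Rule of thumb (assumption): < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 major shift.
print(f"PSI = {score:.2f}", "major shift" if score > 0.25 else "no major shift")
```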

To mitigate these issues, organizations must prioritize data governance frameworks and robust quality control mechanisms, fostering an environment conducive to successful machine learning applications.

Overfitting and Underfitting Problems

Overfitting and underfitting are common challenges in machine learning that largely determine how well a model generalizes. Understanding and addressing them is critical to successful machine learning implementations.

- Overfitting:

- Definition: Overfitting occurs when a machine learning model learns the training data too well, capturing noise and fluctuations rather than the true underlying patterns. This results in high accuracy on training datasets but poor performance on validation or test datasets, indicating a failure to generalize.

- Challenges: Complex models with many parameters are more prone to overfitting, especially when trained on small datasets with limited variability. Irrelevant features compound the problem by giving the model more opportunities to fit noise, which diminishes its reliability.

- Solutions: To combat overfitting, practitioners can simplify models, apply regularization techniques (such as L1 and L2 regularization), and use cross-validation to estimate how each configuration performs on unseen data. Gathering more data, applying data augmentation, and selecting only relevant features can further improve the model's generalization.

- Underfitting:

- Definition: Conversely, underfitting occurs when a model is too simplistic to capture the underlying relationships in the data, leading to poor performance on both training and test datasets. Such a model typically exhibits high bias and low variance.

- Challenges: Overly constrained models, or models built on overly strong assumptions (for example, a straight line fitted to a curved relationship), lack the capacity to learn effectively from the data. Neglecting relevant features can also cause underfitting, leading to inadequate predictive capabilities.

- Solutions: Addressing underfitting often requires selecting more complex models, adding informative features, relaxing regularization constraints, and training longer so the model can capture the patterns in the data. Unlike overfitting, underfitting is usually not cured by adding more data alone, because a model that is too simple cannot exploit it.
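The trade-off described in the list above can be observed by varying a single complexity knob and comparing training error with error on held-out data. This sketch uses k-nearest-neighbors regression on synthetic data (the sine curve, noise level, and split sizes are assumptions for illustration): k = 1 memorizes the training set, while k equal to the training-set size always predicts the training mean.

```python
import math
import random

def knn_predict(train, x, k):
    """Predict y at x as the mean target of the k nearest training points."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def mse(train, data, k):
    """Mean squared error over `data` of a k-NN model built from `train`."""
    return sum((y - knn_predict(train, x, k)) ** 2 for x, y in data) / len(data)

# Synthetic data (illustrative assumption): y = sin(x) plus Gaussian noise.
random.seed(0)
xs = [random.uniform(0, 6) for _ in range(60)]
points = [(x, math.sin(x) + random.gauss(0, 0.3)) for x in xs]
train, valid = points[:40], points[40:]

# k = 1: very flexible, fits the training set exactly (overfitting risk).
# k = len(train): always predicts the training mean (underfitting).
for k in (1, 5, len(train)):
    print(f"k={k:2d}  train MSE={mse(train, train, k):.3f}  "
          f"validation MSE={mse(train, valid, k):.3f}")
```

With k = 1 the training error is exactly zero while the validation error is not, the signature of overfitting; with k = 40 both errors are high, the signature of underfitting. In practice the middle value would be chosen with cross-validation rather than a single split.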

Overfitting and underfitting highlight the balancing act of model selection and training, which practitioners must manage to build robust models that keep their predictive power amid the complexity of real-world data.
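The L1 and L2 regularization mentioned earlier differ in how they shrink weights. For a model with one feature and no intercept (a simplifying assumption), both penalized least-squares problems have closed forms: L2 (ridge) divides by Σx² + λ, while L1 (lasso) soft-thresholds the numerator Σxy by λ/2. The dataset below is hypothetical.

```python
import math

def ridge_weight(xs, ys, lam):
    """Weight w minimizing sum((y - w*x)**2) + lam * w**2 (L2 penalty)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def lasso_weight(xs, ys, lam):
    """Weight w minimizing sum((y - w*x)**2) + lam * abs(w) (L1 penalty).

    With a single feature the minimizer is the least-squares numerator
    shrunk toward zero by lam / 2 and clipped at zero (soft-thresholding).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return math.copysign(max(abs(sxy) - lam / 2, 0.0), sxy) / sxx

# Hypothetical one-feature dataset with a slope of roughly 2.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

for lam in (0.0, 10.0, 200.0):
    print(f"lambda={lam:6.1f}  L2 weight={ridge_weight(xs, ys, lam):.3f}  "
          f"L1 weight={lasso_weight(xs, ys, lam):.3f}")
```

At lambda = 200 the L1 weight is exactly 0.0 while the L2 weight has only shrunk. This is why L1 regularization is associated with sparse models and implicit feature selection, whereas L2 spreads the penalty smoothly across weights.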

Ethical Considerations in ML

As machine learning technologies continue to permeate everyday life, ethical considerations must guide their development and implementation. Addressing ethical dilemmas balances innovation with responsibility and helps ensure that these technologies serve societal progress transparently and fairly.

- Algorithmic Bias: A prevalent concern is bias encoded in data and algorithms, which can lead to discriminatory outcomes that disproportionately affect marginalized groups. For example, biased training data may yield unfair treatment in hiring algorithms or credit assessments, perpetuating existing disparities.

- Transparency and Accountability: With many machine learning models functioning as black boxes, understanding their decision-making processes becomes challenging. This opacity hinders accountability and necessitates greater emphasis on interpretability, compelling practitioners to develop models that offer insights into how decisions are made.

- Privacy and Data Protection: The extensive datasets required for training machine learning models often comprise personal information. Navigating privacy laws, securing user consent, and adhering to data protection regulations are crucial responsibilities that organizations must uphold, safeguarding individual rights.

- Informed Consent: Organizations must ensure that users are fully informed regarding data usage and algorithmic decision-making processes. Initiatives to increase transparency around how data is collected, processed, and utilized will cultivate trust between users and machine learning applications.

- Ethical AI Development: Organizations should establish ethical frameworks for AI development that outline the responsibilities of developers, stakeholders, and users. Integrating ethical considerations from the outset fosters accountability and aligns technological progress with societal values.
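Algorithmic bias can be partly quantified by comparing how often a model makes a favorable decision for each group. The sketch below computes per-group selection rates and their ratio (sometimes called the disparate impact ratio); the screening data and group labels are hypothetical. In US employment contexts, a ratio below 0.8 (the "four-fifths rule") is often treated as a signal of possible adverse impact, but it is a screening heuristic, not proof of discrimination, and other fairness metrics can disagree with it.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive predictions (1) within each group."""
    positives, totals = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received any positive prediction
    return min(rates.values()) / highest

# Hypothetical screening decisions (1 = advance) for two applicant groups.
preds = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5

print(selection_rates(preds, groups))         # {'A': 0.8, 'B': 0.4}
print(disparate_impact_ratio(preds, groups))  # 0.5
```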

In summary, recognizing and addressing ethical considerations in machine learning is paramount for building responsible AI systems. By championing fairness, accountability, and transparency, practitioners can ensure that machine learning advancements benefit society while minimizing potential harms.

Resources for Learning Machine Learning

As individuals delve into the field of machine learning, a wealth of resources is available to guide their learning journey. Let’s explore some recommended textbooks, online courses, and other valuable materials that can facilitate skill development in this domain.

Recommended Textbooks and Publications

- Machine Learning Fundamentals by Milly KC

- Description: This comprehensive textbook covers essential machine learning concepts, algorithms, and applications, providing a solid foundation for learners.

- Link: Amazon Link

- Pattern Recognition and Machine Learning by Christopher M. Bishop

- Description: A detailed introduction to the fields of pattern recognition and machine learning, this textbook covers a variety of models and methodologies.

- Link: Springer Link

- Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow by Aurélien Géron

- Description: A practical guide that uses the Python libraries Scikit-Learn, Keras, and TensorFlow to build machine learning applications, making it well suited to hands-on learners.

- Link: O’Reilly Link

- Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

- Description: A comprehensive resource on deep learning, covering both theoretical frameworks and applications in various domains.

- Link: Deep Learning Book

- The Elements of Statistical Learning by Trevor Hastie, Robert Tibshirani, and Jerome Friedman

- Description: A foundational text linking statistics with machine learning principles, detailing statistical learning techniques and methods.

- Link: Web Link

Online Courses and Certifications

- Coursera – Machine Learning by Andrew Ng

- Description: This foundational course, taught by Andrew Ng, provides an excellent introduction to machine learning concepts and applications.

- Link: Coursera Machine Learning

- edX – Introduction to Artificial Intelligence (AI)

- Description: Explores AI fundamentals, including machine learning principles, offering a comprehensive approach to understanding the field.

- Link: edX AI Course

Additional Resources

- Kaggle

- Description: A community platform for data science competitions, offering abundant datasets and collaborative solutions for practicing machine learning skills.

- Link: Kaggle

- Towards Data Science (Medium)

- Description: An educational platform with numerous articles, tutorials, and case studies focused on machine learning and data science topics.

- Link: Towards Data Science

These resources empower individuals seeking to build a strong foundation in machine learning, facilitating exploration of concepts, methodologies, and practical applications within the field.

Useful Tools and Software for ML

Machine learning practitioners have access to powerful tools and libraries that enhance the development and implementation of machine learning solutions. Below are some notable tools and software that facilitate various aspects of machine learning.

- TensorFlow

- Description: An open-source library that simplifies numerical computations and accelerates machine learning model development, widely employed for deep learning applications.

- Keras

- Description: A high-level neural networks API that runs on top of TensorFlow (and, since Keras 3, also JAX and PyTorch); Keras streamlines deep learning model creation and deployment.

- PyTorch

- Description: An open-source machine learning library known for its dynamic computation graphs, which make it well suited to developing deep learning models.

- scikit-learn

- Description: A widely used Python library that provides efficient tools for data mining and analysis, particularly focused on traditional machine learning algorithms.

- Jupyter Notebook

- Description: An open-source web application for creating and sharing documents that contain live code, equations, visualizations, and narrative text, enriching the learning experience.

- Apache Spark

- Description: A unified analytics engine designed for big data processing, featuring built-in modules for machine learning, stream processing, and more.

By integrating these tools into their workflows, machine learning practitioners can streamline their coding processes, enhance productivity, and develop robust models tailored to specific applications.

Community and Collaboration

The importance of community and collaboration in machine learning cannot be overstated: they foster knowledge sharing, innovation, and skill development. Building connections within the community enriches the learning experience and promotes collaboration among professionals.

Importance of Networking in ML

- Community Engagement: Online platforms and communities facilitate knowledge sharing among people interested in machine learning, providing opportunities for collaboration and collective innovation. These communities lower barriers to participation and support inclusive engagement.

- Networking Benefits: Engaging within the ML community paves the way for exchanging ideas, resources, and best practices, thereby enhancing learning experiences and generating fresh insights. Mutual collaborations frequently yield innovative solutions capable of addressing complex challenges.

- Data Sharing and Resource Access: Collaborative networks simplify access to essential datasets and resources, allowing individuals and teams to experiment with machine learning methodologies in a supportive environment.

- Skill Development: Being part of a collaborative community fosters skill improvement through peer interactions, mentorship, and knowledge sharing, enabling individuals to gain new perspectives and broaden their expertise.

- Enhanced Research and Development: The interdisciplinary nature of machine learning encourages collaboration across fields, driving innovation and leading to comprehensive solutions by integrating insights from various disciplines.

Contribution to Open Source Projects

- Purpose and Passion: Contributing to open source projects not only improves the software itself but also strengthens contributors' own skills while advancing the machine learning community. Having a clear motivation for contributing helps align efforts with projects that match personal interests.

- Finding Projects: Platforms like GitHub host numerous open source projects that welcome outside contributions. Seeking out projects that spark interest, particularly issues labeled “good first issue,” can ease the first steps of contributing.

- Types of Contributions: Contributions can vary, including coding, bug fixes, documentation improvements, or concept validations. Identifying a specific area of expertise or interest streamlines the decision-making process around suitable contributions.

- Engaging with Community: Building connections with community members can foster collaboration, providing support and enhancing knowledge exchanges related to machine learning techniques and practices.

Participating in ML Competitions

Participating in machine learning competitions can serve as an exciting avenue for hands-on learning and skill enhancement. Here are some essential aspects to consider:

- Overview and Opportunities: Various platforms, like Kaggle and the ML Olympiad, host competitions in which participants tackle real-world data challenges, applying machine learning techniques to derive insights and solutions.

- Diverse Challenges: Competitions present an array of problems, from predicting healthcare outcomes to image classification tasks. These scenarios require participants to leverage a variety of machine learning models and techniques, facilitating extensive learning experiences.

- Benefits of Participation: Competitions provide exposure to real datasets, challenge participants to innovate, and foster collaboration. Many competitions allow individuals to form teams, encouraging collective problem-solving among peers.

- Getting Started: Aspiring participants can begin by choosing competitions suited to their skill level and following social media or platform announcements for upcoming events.

By contributing to open source projects and participating in competitions, aspiring machine learning practitioners can broaden their skill sets, connect with professionals, and contribute to the collective knowledge within the field.

Future Directions in Machine Learning

The field of machine learning is in constant flux, driven by innovations that promise to reshape various industries. Several key future directions are emerging that highlight the transformative impact of machine learning.

Innovations in Machine Learning Techniques

- Generative Adversarial Networks (GANs): GANs have attracted wide attention for data generation. Their ability to create realistic synthetic data holds considerable potential across sectors, from fashion design to drug discovery.

- Transfer Learning: Transfer learning enables models to apply knowledge gained in one problem domain to another, which can reduce training time and data requirements in many applications.

- Explainable AI (XAI): As concerns about accountability and transparency in AI grow, the development of interpretative models is crucial. Innovations in XAI will enhance user trust by elucidating decision-making processes within machine learning systems.

- Automated Machine Learning (AutoML): Autonomously selecting optimal models and hyperparameters lowers entry barriers for non-experts, streamlining the machine learning workflow and enabling wider adoption.

- Federated Learning: This approach emphasizes privacy and data security by letting participants collaboratively train models without sharing their raw data, which can make it easier to comply with privacy regulations.
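Federated learning, listed above, is often illustrated with federated averaging (FedAvg): each client trains on its own data, and a server averages the resulting weights in proportion to each client's dataset size. The sketch below is a deliberately tiny version under stated assumptions: a single-weight linear model, plain gradient descent, hypothetical client data, and none of the secure aggregation or communication machinery a real deployment would need.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """A few epochs of full-batch gradient descent on one client's data.

    Model: y = w * x (a single weight, kept tiny for illustration).
    """
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def fedavg_round(w_global, clients):
    """One FedAvg-style round: local training, then a size-weighted average.

    Only the updated weights leave each client; the raw (x, y) pairs do not.
    """
    updates = [local_update(w_global, data) for data in clients]
    sizes = [len(data) for data in clients]
    return sum(w * n for w, n in zip(updates, sizes)) / sum(sizes)

# Hypothetical private datasets held by two clients (slope close to 2).
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (1.0, 2.2), (2.0, 3.8)],
]

w = 0.0
for _ in range(20):
    w = fedavg_round(w, clients)
print(f"global weight after 20 rounds: {w:.3f}")
```

The global weight converges close to the slope that fits both clients' data, even though the server never sees any client's (x, y) pairs. Shared weights can still leak information, which is why production systems often add techniques such as secure aggregation or differential privacy.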

Through these innovations, machine learning is poised to transform how businesses harness data to drive efficiency, uncover insights, and foster innovation.

Predictions for ML Growth Areas

- Augmented Intelligence: Combining human cognitive abilities with machine intelligence is expected to raise productivity across industries, and this area is widely predicted to grow significantly in the coming years.

- Quantum Computing: Expected breakthroughs in quantum computing, which some forecasts placed in the mid-2020s, could expand data processing capabilities and create new opportunities for solving complex problems with machine learning.

- AI Democratization: The trend toward democratizing AI will foster innovation across small and large enterprises, enabling businesses of all sizes to adopt machine learning technologies and compete effectively in the marketplace.

- AI Ethics Standards: Organizations are anticipated to adopt comprehensive ethical frameworks, ensuring responsible AI practices that prioritize fairness and accountability, ultimately shaping public perception.

- Continuous Learning Models: Models that keep learning from new data are projected to evolve continually, improving ML system efficacy and employee training and setting the stage for AI-powered workplace automation.

By staying attuned to these future directions, machine learning professionals can prepare for anticipated trends while helping shape the evolving narrative of AI technology.

Conclusion

Machine learning has moved beyond theoretical constructs into everyday applications, fundamentally altering industries and setting new trajectories for innovation. Understanding its core principles, navigating its challenges, and harnessing its potential responsibly are crucial for professionals and practitioners alike. As diverse case studies and emerging trends show, machine learning continues to drive creative solutions to complex problems, pointing to a future full of possibilities. By embracing the fundamental concepts explored in this article, individuals can engage meaningfully with the dynamic landscape of machine learning and build successful careers in an increasingly data-driven world.

